ruby’s unexpected i/o vs cpu unfairness

Recently, while benchmarking a test application, I observed that if I mixed threads doing input/output (such as reading files or network/database requests) and threads doing CPU work, the I/O work would be slowed down massively. It took me a while to realize this was caused by how (and when) Ruby switches between threads.

For instance consider querying a web server (here I’m using google as an example) as fast as possible:

require 'net/http'

require 'benchmark/ips'

def perform_request

Net::HTTP.start('www.google.com', open_timeout: 0.5, read_timeout: 0.5, write_timeout: 0.5) do |http|

http.get('/')

end

end

Benchmark.ips do |x|

x.config(time: 1, warmup: 0)

x.report("request") { perform_request }

endWhen I run this example on my current wifi, I get:

$ bundle exec ruby io-cpu-unfairness-1.rb

Calculating -------------------------------------

request 16.571 (±12.1%) i/s - 17.000 in 1.039825s I get an OK-ish 60ms per request, which means I do a little over 16 such requests in a second.

Now, what happens if I add a background thread that is crunching some data while I’m trying to reach out to google?

require 'net/http'

require 'benchmark/ips'

Thread.new do

Thread.current.name = 'cpu-heavy'

foo = 0

while true

foo += 1

foo = 0 if foo > 1_000_000_000_000

end

end

def perform_request

Net::HTTP.start('www.google.com', open_timeout: 0.5, read_timeout: 0.5, write_timeout: 0.5) do |http|

http.get('/')

end

end

Benchmark.ips do |x|

x.config(time: 1, warmup: 0)

x.report("request") { perform_request }

end$ bundle exec ruby io-cpu-unfairness-2.rb

Calculating -------------------------------------

request 1.664 (± 0.0%) i/s - 2.000 in 1.201622s Suddenly, performance takes a big dive and we don’t even get through more than a single request per second.

What’s going on here?

Using the gvl-tracing gem (on Ruby 3.2), we can actually visualize what’s happening.

Compare the thread timelines for both examples:

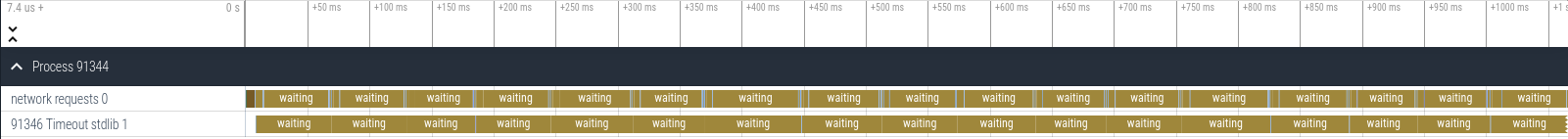

Figure 1: Timeline for fast example.

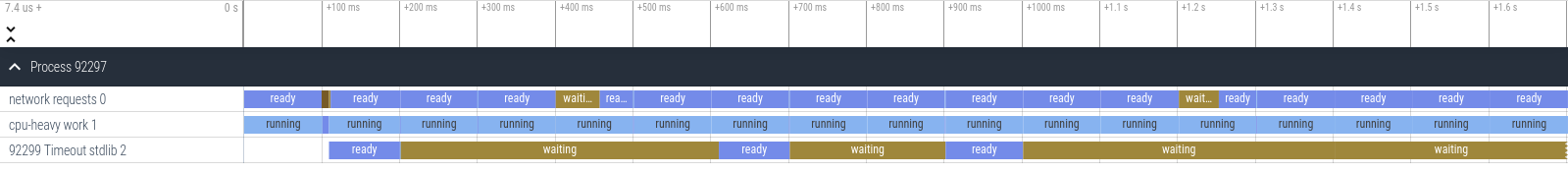

Figure 2: Timeline for slow example (with a background thread consuming CPU).

This is in fact caused by Ruby’s Global VM Lock (GVL), and the way Ruby decides when to switch between several threads. Whenever a thread is going to block waiting for some reply, and there are other threads wanting to run, Ruby will switch to them. But if a thread never blocks, then Ruby will give it around 100ms to run before forcing a switch.

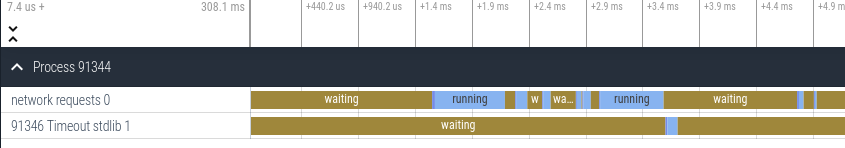

This explains the difference between figures 1 and 2: In figure 1, the "network requests" thread spends most of its time waiting on network requests, and if we zoom in (figure 3, below), we see that when a reply is available, the thread immediately wakes up and issues the next network request.

Figure 3: Zooming in on the fast example.

In the figure 2, the "network requests" thread spends most of its time in the ready state — that is, it’s ready to continue working, but it must wait for the cpu-heavy thread to finish its own 100ms.

This behavior ends up being quite unfair for a thread doing I/O work. That thread may only work for a few milliseconds before blocking, but once it blocks it must wait for all other threads to have their own go, which at 100ms per thread can take a long time.

The takeaway here is, be careful when mixing input/output (such as reading files or network/database requests) and CPU work: suddenly your I/O will be slower and it may be hard to understand why. This can happen if you’re using a multi-threaded webserver, such as puma, or a multi-threaded job queue such as sidekiq.

Edit: If you know that some block of Ruby code will be CPU intensive, then as @maciejmensfeld suggested, you can use Thread#pass to tell the Ruby scheduler to give other threads a chance before the 100ms period is up.